From plagiarised books to puffed-up Popes. Credit: Canva

AI can create stories, images, videos, and viral moments in seconds, but not without backlash. It raises complex ethical questions: Who is responsible for what’s fake? Can we trust what we see? Is it inspiration or theft? In Europe, regulation of AI-generated content is tightening as it becomes the center of debates about ethics, misinformation, and digital safety.

Here are 5 cases that show what happens when AI goes too far.

Ghibli-Style AI-Art on Instagram

A popular AI art tool released earlier this year lets users create images in the style of Studio Ghibli. Within a week, Instagram was flooded with these images. Many of us thought they came from real animators, while others assumed they were concept art.

The backlash was swift:

- Fans called it style theft

- Artists warned that it devalued their hand-drawn work

- Ghibli co-founder Hayao Miyazaki has described AI-generated animation as “an insult to life itself”

This triggered debates about artistic copyright and raised a question: Do AI copies count as creative theft?

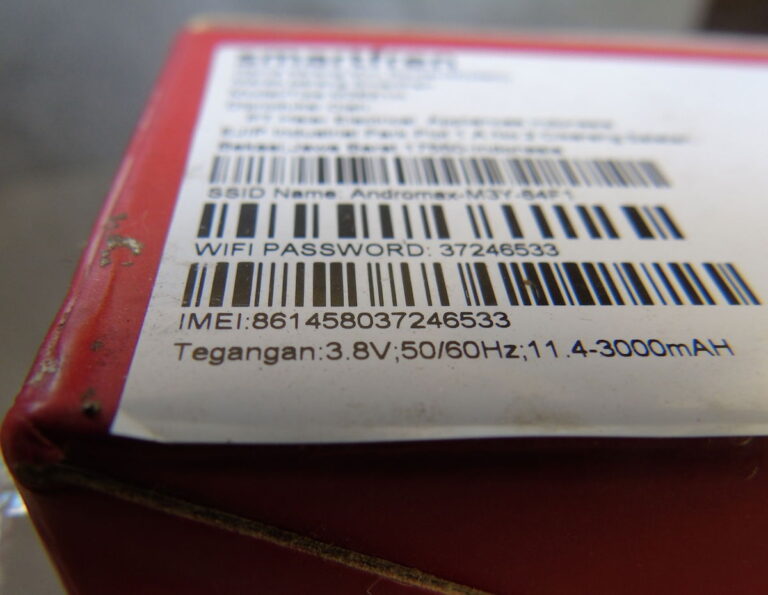

The Pope in a Puffer Jacket

Honestly, it was hard to tell that this one was fake. In early 2023, a hyper-realistic image of the late Pope Francis wearing a white Balenciaga puffer coat went viral on Twitter. It was convincing enough that people took it for a paparazzi photo.

The twist?

It was completely fabricated, made with a popular AI image tool called Midjourney. Reactions ranged from laughter to panic, with public figures and journalists calling it a “wake-up call” because of how easily a believable fake can spread.

It demonstrated how rapidly fabricated images of public figures can circulate and how hard they are to distinguish from genuine ones.

Deepfakes Enter EU Elections

During election campaigns across Europe in 2024, a few deepfake video and audio clips of politicians made the rounds online.

Example: Slovakia’s Election Deepfake

One target was Michal Šimečka, leader of the Progressive Slovakia party. Recordings emerged that falsely depicted Šimečka conspiring with journalist Monika Tódová to manipulate election results and, of all things, raise beer prices. They went viral, with many voters believing they were genuine. The clips were traced back to political groups trying to discredit their opponents.

This event reminds us that stronger election protections and AI labeling are vital.

Children’s Books Swarm Amazon

In late 2024, hundreds of unoriginal “children’s books” swarmed Amazon listings. Many of these books were written by AI, and buyers started to notice the warning signs: conflicting morals, recycled content, plagiarised plots, and strange phrasing and dialogue. Worse, some of the books included incorrect cultural references and insensitive content.

For parents, this was a red flag that raised bigger concerns about quality control and ethical standards in self-publishing.

Fake News Articles Written By AI

In 2024, investigative journalists from Poland and Germany discovered that several websites were churning out news articles written entirely by AI. No editors, no sources, and no accountability.

The articles imitated real outlets in both tone and layout, making it difficult for casual readers like me to tell them apart from the real thing. Fact-checking, especially of news, is essential because misinformation is rising at an alarming rate. Countries like Spain are responding with new laws that require AI content to be clearly labeled.

Why This Matters

These controversies show us how AI is shaping the future of media, and not always for the better. Whether it is art theft, political misinformation, or forged books, the line between fake and real is becoming blurred. As the tools get better, the risks increase. The EU AI Act is set to bring restrictions across all member states. But for now, one thing is clear: if it looks too real to be true, it probably is.