Meta AI is violating the privacy of people who are not even on any of its social media platforms | Credits: Shutterstock

Meta Chief Executive Mark Zuckerberg called it “the most intelligent AI assistant that you can freely use.” Yet Barry Smethurst, 41, a record shop worker from Saddleworth, encountered a far less intelligent episode he described as “terrifying”.

Stranded on a platform waiting for a morning train to Manchester Piccadilly, Barry thought he would rely on Meta’s brand‑new WhatsApp AI assistant to get help. Instead, he ended up with a personal phone number he had no right to.

When the chatbot confidently delivered a mobile contact for TransPennine Express customer services, Barry dialled, expecting helpful train staff. Instead, a bewildered woman on the line from Oxfordshire—170 miles away—answered, saying her number had never been public nor associated with a transit operator. The humiliation went both ways.

Barry realised too late that he was calling someone with no connection to his journey, while the woman in Oxfordshire became the unwitting target of frustrated travellers seeking train updates.

Barry then tried again, but the chatbot failed once more and gave him yet another private number belonging to a non-WhatsApp user. This time it was the number of James Gray, a property executive from Oxfordshire, as The Guardian reported in an article about WhatsApp’s AI feature.

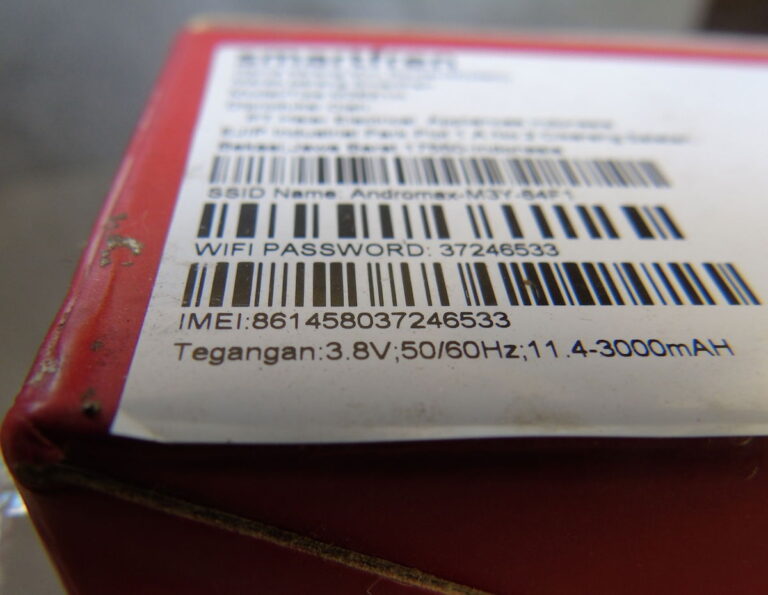

Violating privacy of people not even on WhatsApp

Meta’s WhatsApp AI assistant had, quite literally, made a private phone number public for a second time, demonstrating that even the most confident chatbot can get it horribly wrong.

The gross mistake has reignited conversations about the reliability, privacy safeguards, trustworthiness, and corporate responsibility of artificial intelligence. Zuckerberg’s AI, which millions of users can access now, is positioned as a public boon: an intelligent helper accessible to every WhatsApp user.

Asked about Zuckerberg’s claim that the AI was “the most intelligent”, Gray said: “That has been thrown into doubt in this instance.”

And for Barry, it proved to be anything but. “It’s terrifying,” Barry Smethurst said, after he raised a complaint with Meta. “If they made up the number, that’s more acceptable, but the overreach of taking an incorrect number from some database it has access to is particularly worrying.”

Stronger controls needed

While Meta has defended its assistant as a secure and practical innovation, internal documents now show some of the limitations baked into the model. AI systems often scrape data from the web and internal databases, but the assistant is meant to filter sensitive personal data. In this case, the filtering failed, resulting in the disclosure of private information to strangers.

Meta could have avoided the incident by introducing stronger controls around personal data, by verifying answers before release, or by flagging answers with uncertainty rather than delivering them with confidence.
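To make the idea of verifying answers before release concrete, here is a minimal sketch in Python. It is purely illustrative: how Meta's filtering actually works has not been made public in the reporting, and the allowlist, the pattern, and the function names (`VERIFIED_NUMBERS`, `PHONE_PATTERN`, `normalise`, `screen_reply`) are all hypothetical. The sketch scans a chatbot reply for phone-like strings and withholds any number that is not on a list of verified public contacts.

```python
import re

# Hypothetical allowlist of verified public contact numbers (placeholder value only).
VERIFIED_NUMBERS = {"+448000000000"}

# Loose pattern for phone-like digit runs; an assumption, not a full validator.
PHONE_PATTERN = re.compile(r"\+?\d[\d\s\-()]{7,}\d")


def normalise(number: str) -> str:
    """Drop spaces and punctuation so differently formatted numbers compare equal."""
    return re.sub(r"[^\d+]", "", number)


def screen_reply(reply: str) -> str:
    """Withhold any phone number in the reply that is not on the allowlist."""

    def check(match: re.Match) -> str:
        if normalise(match.group()) in VERIFIED_NUMBERS:
            return match.group()
        # Admit uncertainty instead of passing on an unverified number.
        return "[number withheld: could not be verified]"

    return PHONE_PATTERN.sub(check, reply)


print(screen_reply("Try 07700 900123 now."))
```

A real system would need far more than a pattern match, but the design choice is the one the paragraph above describes: an answer containing personal data is checked against a trusted source first, and an explicit statement of uncertainty replaces anything that fails the check.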

This is not the first time Meta’s AI has stumbled. From chatbots inventing quotes to suggesting disallowed actions, the company continues grappling with the fundamental challenge of trust. Training algorithms to say “I don’t know” rather than inventing details—or to properly anonymise personal information—remains as vital as building the models in the first place.

Meta’s AI helper operates in a high‑stakes context. WhatsApp supports the everyday lives of billions of people, helping them stay in touch with friends and family. It also helps people organise themselves in communities, and it helps businesses serve their customers and provide customer service.

The last thing people want is an AI assistant quietly sharing personal phone numbers or other sensitive information. The company now faces pressure to reinforce filters, audit data access points, and commit to user safety as non‑negotiable.

Increasing regulatory pressure

Meta’s assistant and other AI language models and chatbots, including GPT-3 and ChatGPT, are facing increasing regulatory pressure. Across Europe and the UK, lawmakers are debating frameworks such as the Digital Services Act and the AI Act.

Even in the United States, privacy advocates are pushing for more explicit rules around data collection and liability, while in the blockchain space, web3 natives are pushing for data decentralisation. They advocate for returning data control to users, allowing them to store, secure, and share it as needed, and monetise it if they wish to.

When private numbers find their way into public conversations—even via misfired AI assistants—it nudges governments and regulators into action, and consumer activists into further pressuring officials to adopt rules that protect the end user.

Meanwhile, Barry simply wanted a train update. His morning commute became a cautionary tale of AI hubris and privacy failure.