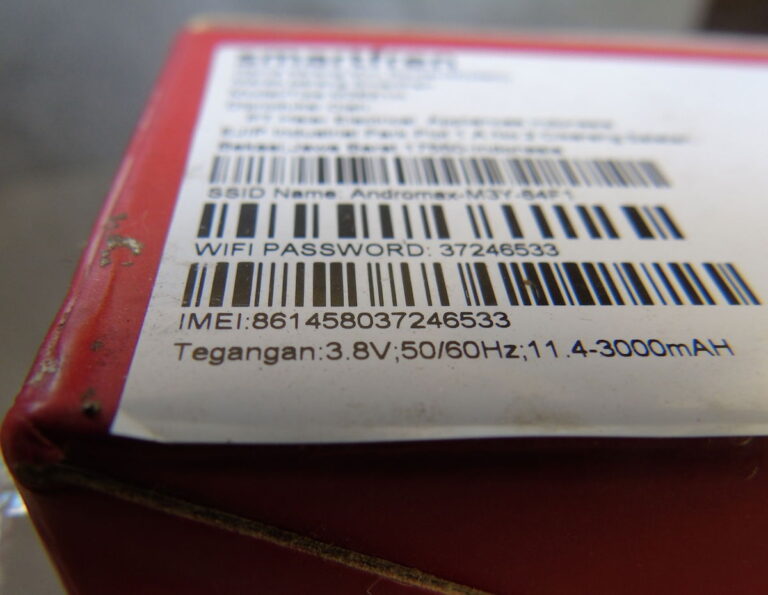

WhatsApp says its new AI features are private, secure, and optional. But in today’s world, when a tech company says, “Trust us,” it’s natural to take it with a grain of salt. The feature in question is called “Private Processing.” It might sound like a buzzword, but it’s an actual attempt to let AI assist with your messages without exposing your data.

What It Does, And Doesn’t

Private Processing allows WhatsApp to offer AI-powered tools such as smart replies and message summaries while keeping your chats end-to-end encrypted. WhatsApp says the data used by the AI is not visible to Meta and is not stored. Once the task is done, the information disappears, or at least that’s the idea. The feature is optional, so you choose whether to use it. In group chats, you can see who has enabled the AI and block others from using it on your messages. It is a reassuring setup, on paper.

Privacy Concerns

Privacy experts say it is a good start, but not without risks. Even if WhatsApp is not reading your messages, processing that data, however briefly, still opens a small window for attack. Cryptographer Matthew Green of Johns Hopkins University says that if anything leaves your phone, it can become a target. The bigger issue? We are still relying on trust: we have to believe that Meta won’t quietly change how things work later. AI tools are showing up everywhere, from search bars and inboxes to your phone, and most come with a catch: they need your data to work.

That is why WhatsApp’s approach is unusual: it is trying to build smarter AI without asking you to give up more of your data. If it works, it could set a new standard. But if it fails, or a loophole is exposed, we will be faced with a genuinely scary thought: that companies say “privacy” when they actually mean temporary silence. So the question remains the same: in the age of AI, who is really in control, and how do we know?
