The challenge before us is immense but not impossible.

Photo by Shutterstock

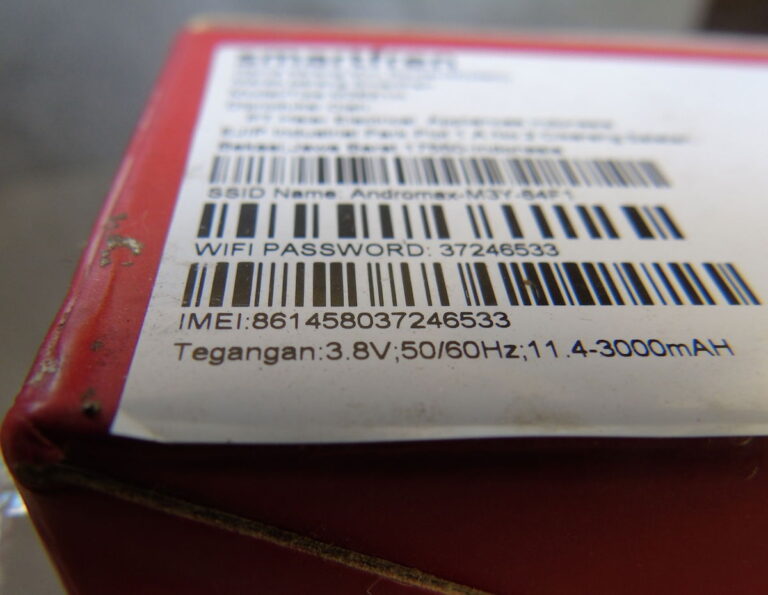

Will.i.am, best known for his music, is jumping on the AI train. At a recent CMO breakfast during the Cannes Lions festival, he shared his thoughts on the intersection of privacy and the next technological revolution. His message echoed a now-familiar phrase: “Data is the new oil.” Once a catchy slogan, the idea has become a reality that defines the modern digital economy. Today, some of the most powerful companies in the world are built around collecting, analysing, and monetising vast amounts of user data.

A recent Business Insider article captured will.i.am’s view of both AI’s enormous potential and the critical challenge it faces: privacy.

Privacy Restricts AI’s Potential

Artificial intelligence, at its core, depends on data. The more data AI systems can access and learn from, the smarter, more responsive, and more innovative they become. Whether it’s tailoring personalised recommendations, detecting patterns in healthcare data, or automating complex tasks, AI’s power grows with its data intake.

However, as privacy concerns mount worldwide and governments introduce stricter regulations, such as the GDPR in Europe and the CCPA in California, the flow of data into AI systems is increasingly restricted. Users, meanwhile, are growing more aware of, and more wary about, how their personal information is collected and used.

Will.i.am stated this paradox plainly: AI needs access to vast streams of data to evolve, yet those very data streams are being locked down by privacy rules and user distrust. As a result, efforts to protect privacy can unintentionally “kill”, or at least severely limit, the potential of AI. That may not be entirely bad: slowing the AI train could give us time to understand its consequences and put in place the regulations needed to protect us.

The Privacy Trust Gap in AI

On one side, users demand control over their personal data and transparency about how it is used. On the other, AI companies rely on massive data collection, often aggregating information from multiple sources, some of which users may not fully understand or have consented to.

If users feel that their data is being gathered without their full awareness or without adequate safeguards, trust erodes, and adoption slows with it.

The Urgency of Governance

Will.i.am’s call to action is clear: the AI revolution is upon us, but without robust governance frameworks centred on transparency, ethical data use, and genuine user empowerment, AI risks becoming another tool for surveillance capitalism.

Navigating this complexity requires cooperation. Governments, technology companies, and users must come together to:

- Define clear and enforceable limits on what data can be collected and how it can be shared

- Ensure companies are transparent about their AI data practices, making disclosures accessible and understandable

- Empower users with meaningful control over their personal information, including easy opt-out mechanisms

- Prevent exploitative or deceptive practices that use data to manipulate or harm individuals

The Stakes Are High

The challenge before us is immense but not impossible. Data really is the new oil, a resource that fuels innovation and economic growth. How society chooses to handle that data will ultimately decide whether AI becomes a tool for empowerment, creativity, and progress, or a mechanism for exploitation, surveillance, and inequality.

Will.i.am’s reflections remind us that balancing privacy and innovation is not just a technical issue but a fundamental social challenge.